Custom-tuned open-source models for Stable Diffusion

Free and open-source diffusion models, all downloadable from Hugging Face. If you generate images with these models, feel free to tag them #darkvoyage so everyone can see the creations.

This project and its files are offered free of charge by Chris Hunter, owner of Collective Thought Media, a full-service video marketing firm in Rhode Island, USA. If you’d like to tip Chris for his work building these tuned models, there is a donation link below. Have more questions? Read the FAQ.

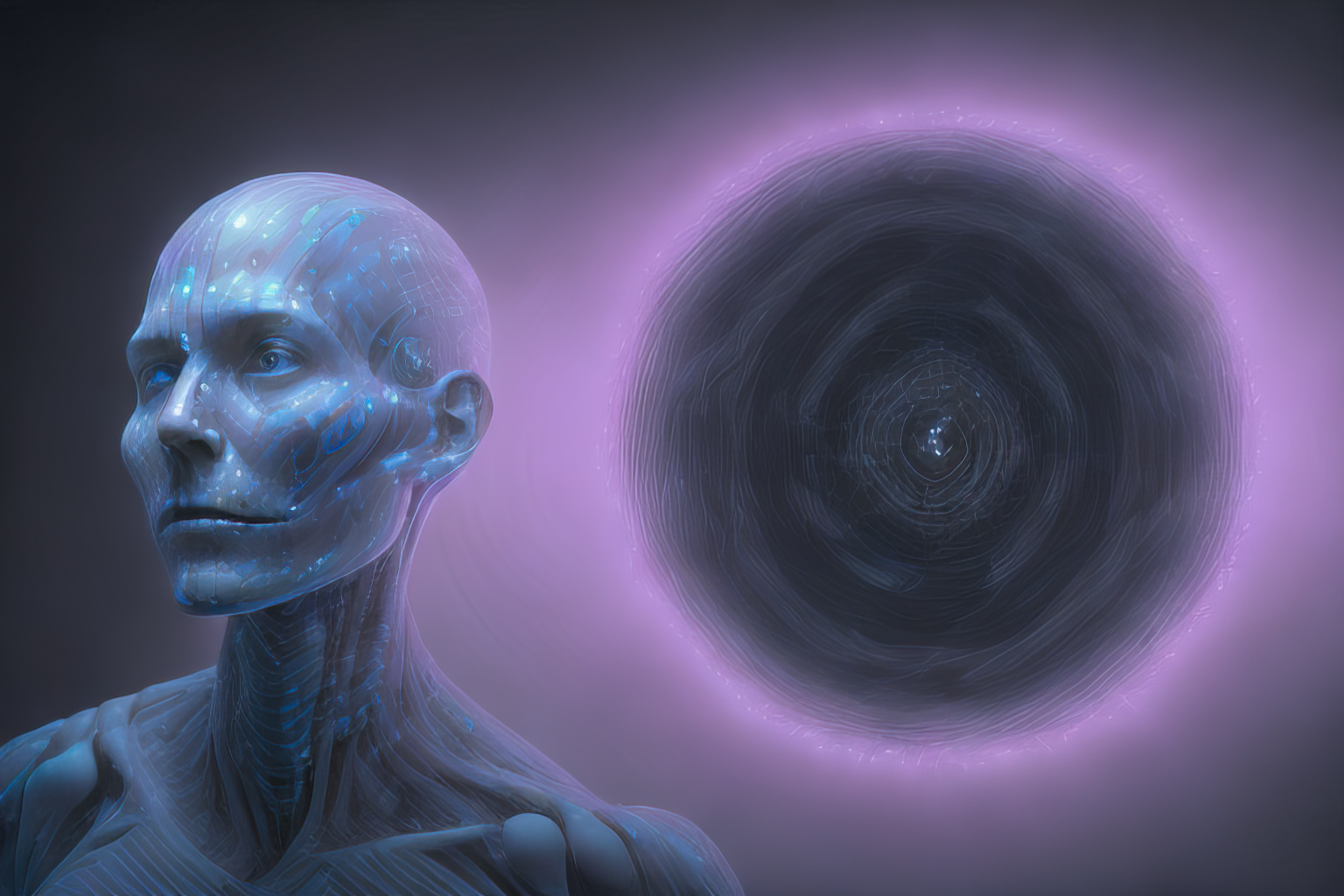

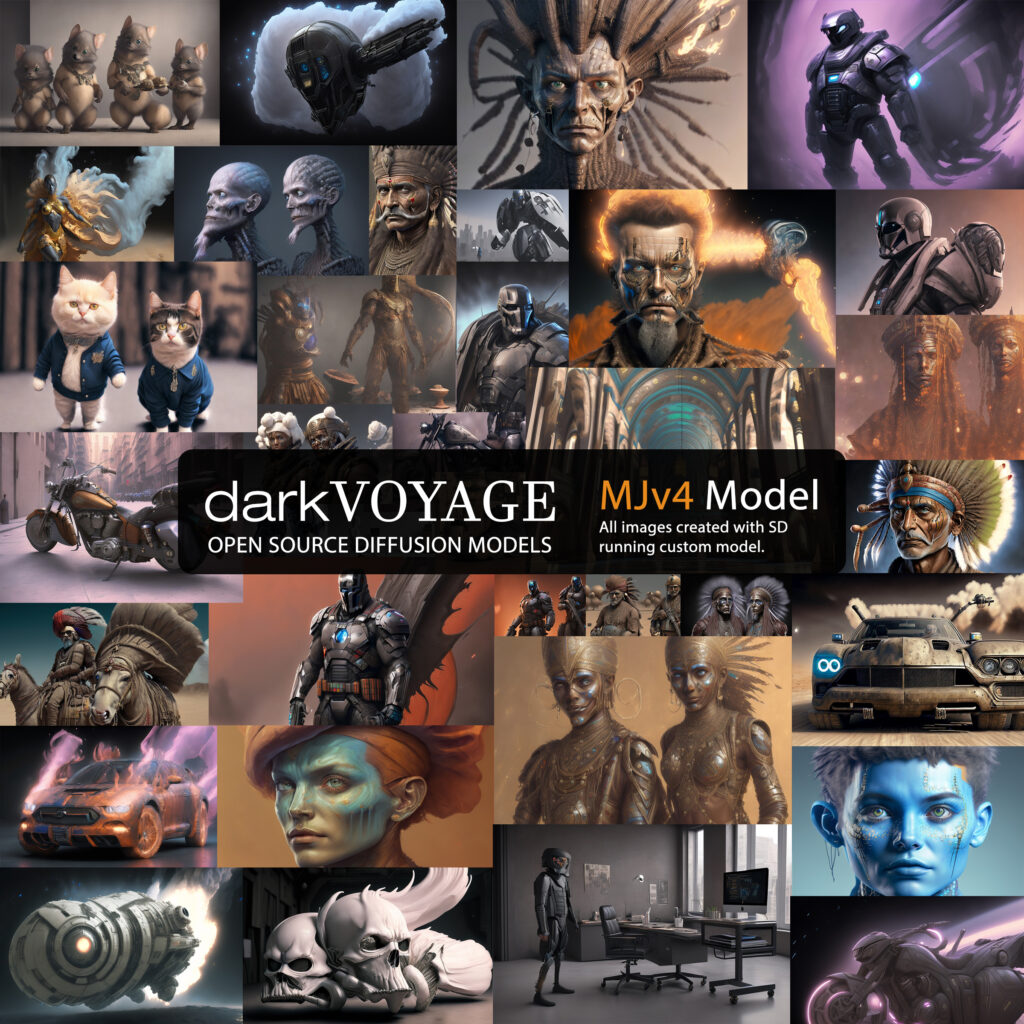

The MJv4 model was tuned on 77 different Midjourney v4 images. It’s great for creating subjects such as abstract faces, machines, vehicles, robots, fantasy and sci-fi characters, and more. The training images were hand-picked from Midjourney for their excellent composition, lighting, and use of color. I mainly prompt it in the format “MJv4 style, describe your subject or scene”. Even simple prompts like “MJv4 style, future cyber knight” or “MJv4 style, garden gnome” can create very realistic, dynamic images in SD.

MJv4 v1 is the original model, trained on 77 reference images. I feel it works best for objects and mechanical renders. Use the phrase “MJv4” or “MJv4 style” near the beginning of your prompt. It is available for download from Hugging Face.

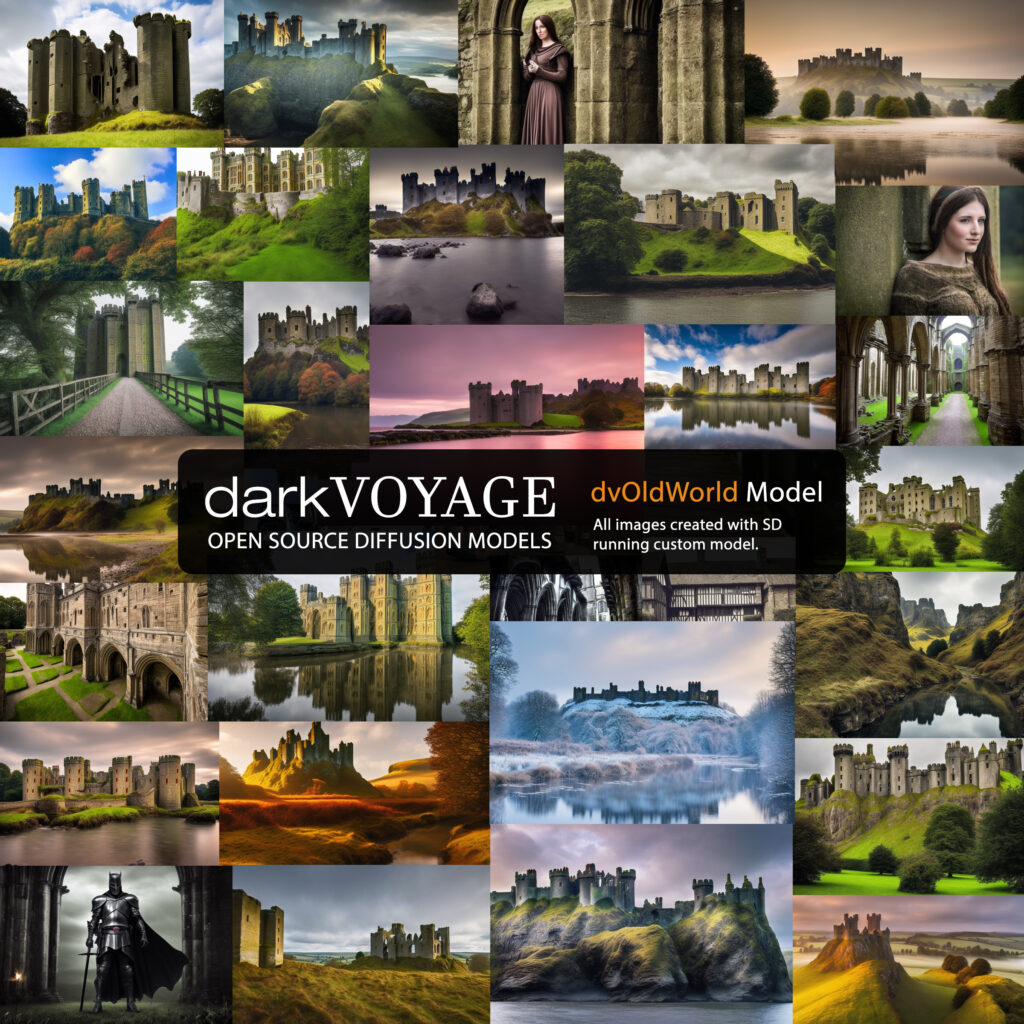

The dvOldWorld model was tuned on 28 different images of castles and UK landscapes. It’s great for creating western European landscapes; in particular, the training images capture the architecture, weather, and general aesthetics of the old-world UK countryside. The tuned model excels at castles, ruins, cathedrals, and anything with an old-world feel. It also works well for inserting objects or people into that type of environment. The phrase that calls the style/place is “dvOldWorld”; make sure it appears near the beginning of your prompt.

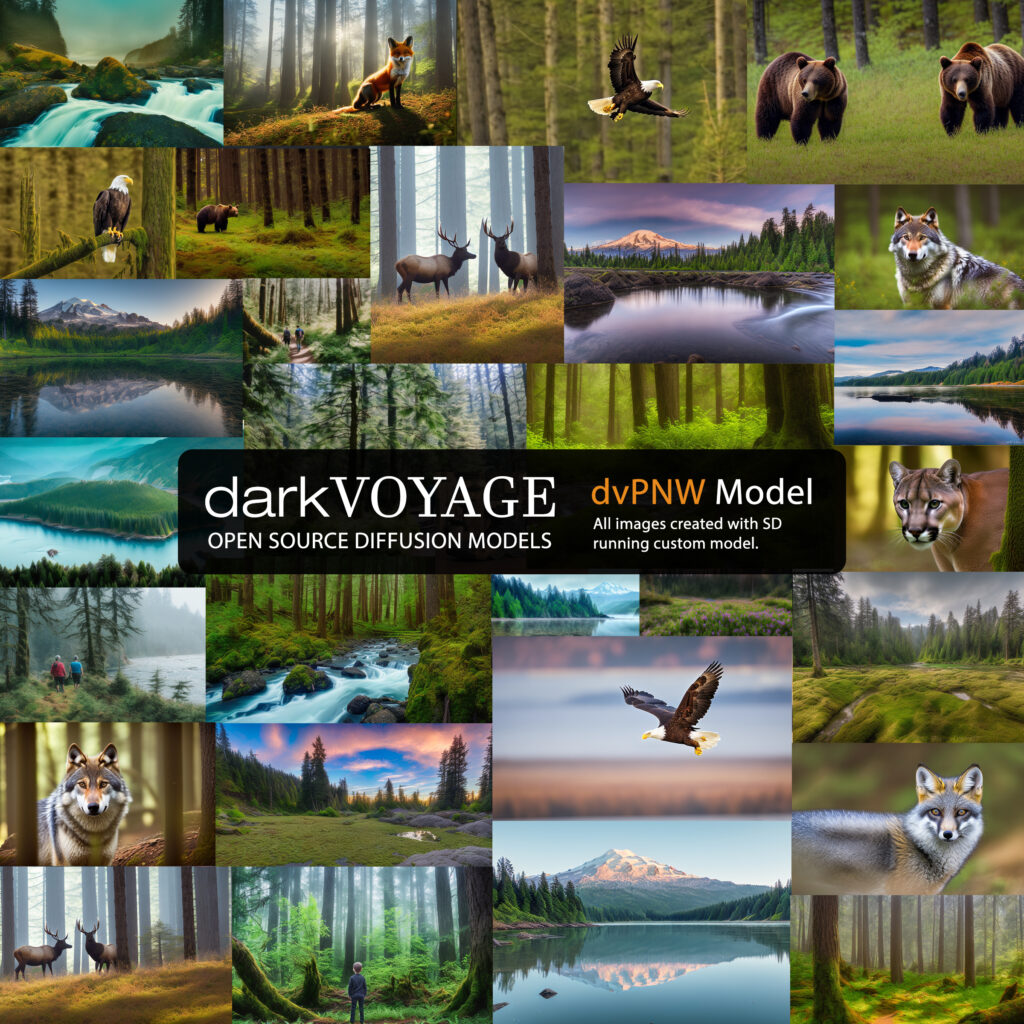

The dvPNW model was tuned on 77 different images of Pacific Northwest landscapes. It’s great for creating landscapes; in particular, the training images capture the forests, terrain, and fauna of the Pacific Northwest. The tuned model excels at rainy scenes, lush fern forests, and wildlife images. It also works well for inserting objects or people into that type of environment. The phrase that calls the style/place is “dvPNW”; make sure it appears near the beginning of your prompt.

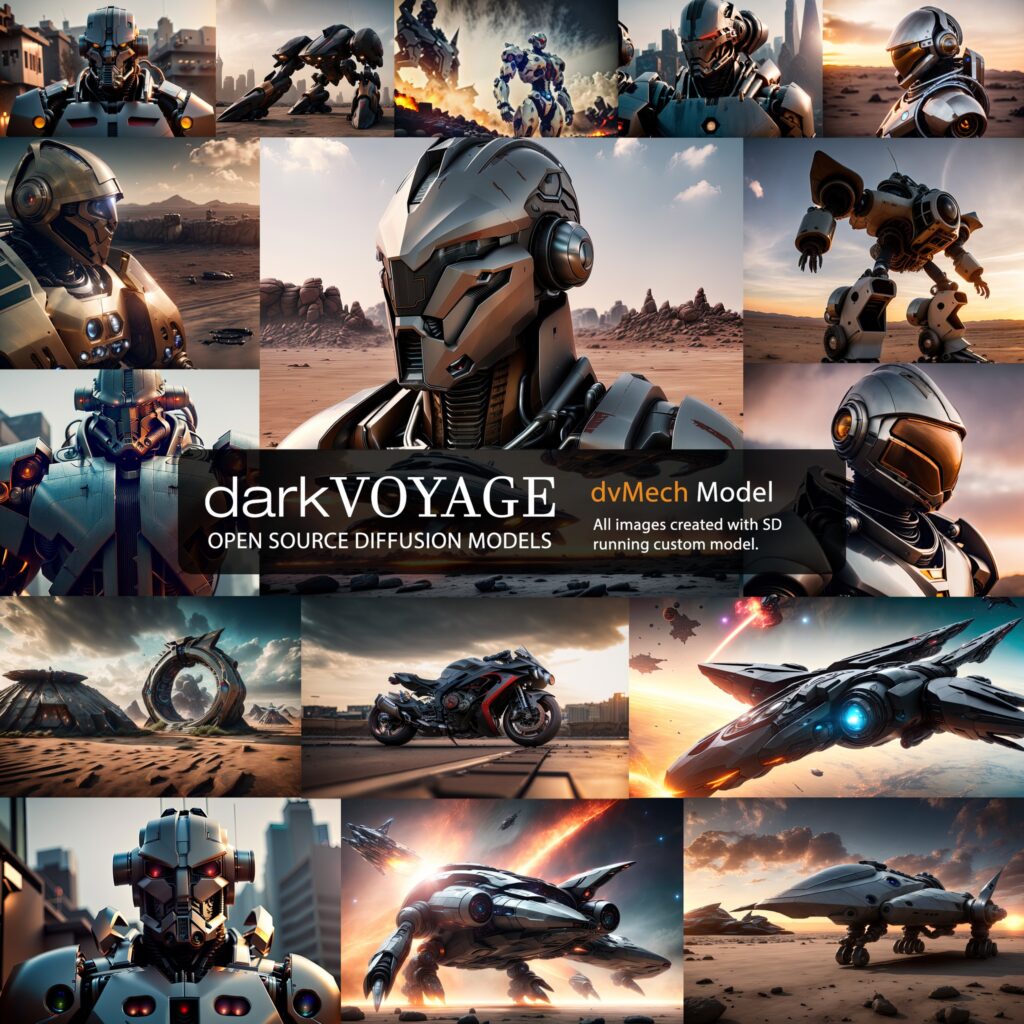

The dvMech model was tuned on 40 different images of mecha, robots, machines, and a few automobiles. It’s great for creating stylized robots, mech warriors, power armor, and machine objects. The phrase that calls the style is “dvMech”; make sure it appears near the beginning of your prompt.

The dvAuto model was tuned on 32 different images of concept, sports, and antique cars. It’s great for creating photorealistic cars and concept cars from simple prompts alone. The phrase that calls the style is “dvAuto”; make sure it appears near the beginning of your prompt.

FAQ

What are these?

These are custom-tuned models designed to speed up the AI art rendering process by providing hand-picked visual references for specific subjects or styles. When you include the identifier a model was tuned on, that single phrase steers Stable Diffusion toward what it learned from the training images. This lets you fine-tune the look of certain subjects, such as a particular place or a certain art style.

How do you make custom tuned models?

These custom-tuned models are made using Hugging Face and Fast DreamBooth. Each one starts as a copy of the standard Stable Diffusion checkpoint (CKPT) file. That checkpoint is then trained on your own instance (reference) images, after which it can generate objects or styles based on those training images.

How do I use these?

Each tuned model links to its corresponding Hugging Face Git repository. Download the custom model and load it into your Stable Diffusion GUI. Once the model is loaded, call the object or style with the same word as the CKPT filename. For example, the MJv4 checkpoint file is named “MJv4.ckpt”, so you would include “MJv4” or “MJv4 style” near the beginning of your prompt. Likewise, for dvOldWorld.ckpt you would use “dvOldWorld” or “dvOldWorld style” near the beginning of your prompt. You can also use prompts like “xyz character, standing in dvOldWorld” to place a character into a specific tuned environment.
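The naming convention for trigger phrases can be captured in a few lines of Python. This is a hypothetical sketch: the helper names `trigger_from_checkpoint`, `build_prompt`, and `build_scene_prompt` are ours and are not part of any Stable Diffusion GUI. It assumes, as with MJv4.ckpt and dvOldWorld.ckpt, that the trigger word is simply the checkpoint filename without its .ckpt extension. It only builds the prompt text; you still paste the result into your GUI.

```python
from pathlib import Path


def trigger_from_checkpoint(ckpt_path: str) -> str:
    """Assumption: the trigger word is the checkpoint filename minus its extension.

    e.g. "MJv4.ckpt" -> "MJv4", "models/dvOldWorld.ckpt" -> "dvOldWorld"
    """
    return Path(ckpt_path).stem


def build_prompt(ckpt_path: str, subject: str, use_style_suffix: bool = True) -> str:
    """Put the trigger phrase at the start of the prompt, then the subject.

    With use_style_suffix=True the prompt starts with "<trigger> style",
    otherwise it starts with the bare trigger word.
    """
    trigger = trigger_from_checkpoint(ckpt_path)
    lead = f"{trigger} style" if use_style_suffix else trigger
    return f"{lead}, {subject}"


def build_scene_prompt(ckpt_path: str, character: str) -> str:
    """Place a character inside a tuned environment,
    following the pattern "xyz character, standing in dvOldWorld"."""
    trigger = trigger_from_checkpoint(ckpt_path)
    return f"{character}, standing in {trigger}"


if __name__ == "__main__":
    print(build_prompt("MJv4.ckpt", "garden gnome"))
    print(build_scene_prompt("dvOldWorld.ckpt", "xyz character"))
```

For example, `build_prompt("MJv4.ckpt", "garden gnome")` returns “MJv4 style, garden gnome”, and `build_scene_prompt("dvOldWorld.ckpt", "xyz character")` returns “xyz character, standing in dvOldWorld”. Deriving the trigger from the filename keeps the prompt in sync with whichever checkpoint is loaded, but it only holds if the file has not been renamed after download.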

Are the “MJv4” models really as good as Midjourney?

No, of course not. Our models are simply tuned versions of standard Stable Diffusion models, so they lack the style pre-prompting and cohesion that the Midjourney team has done such a great job developing. What the MJv4 models DO let you do is create AI art with an aesthetic similar to Midjourney v4 images more quickly and easily.